You’re hiring for a new role in your company. You want to choose the best candidate, but you also want to make sure that everyone—no matter their background—has a fair shot. The challenge is that traditional tests, like IQ or math exams, often create an uneven playing field. This is known as the diversity-validity dilemma: how do we select the right people using accurate tests, while ensuring fairness across different ethnic and cultural groups?

This article explains strategies proposed by Britt De Soete, Filip Lievens, and Celina Druart (2013) for balancing fairness and validity in personnel selection. We’ll break down their findings and look at how game-based assessments (GBAs) and non-verbal tests can be part of the solution to this ongoing challenge.

1. Cognitive Ability Measures: Moving Beyond Traditional Tests

One of the key problems in the diversity-validity dilemma is that traditional cognitive tests—such as verbal reasoning or IQ tests—are influenced by education and cultural background. These tests tend to show significant performance gaps between groups, especially between ethnic majority and minority candidates.

Research recommends exploring non-verbal cognitive assessments as an alternative. Raven’s Progressive Matrices, for example, is a non-verbal test that measures problem-solving ability through visual puzzles. It has been used successfully in cross-cultural contexts because it reduces the influence of language and cultural background. By asking candidates to solve patterns or puzzles rather than answer verbal questions, it helps level the playing field.

Game-based assessments (GBAs) are a modern evolution of this idea. In GBAs, candidates are given complex, logic-based tasks presented in a game format. These tasks engage reasoning and abstract thinking rather than relying on language or culturally specific knowledge. This makes GBAs more inclusive by design and helps reduce the biases seen in traditional testing methods, which eases the diversity-validity dilemma. For instance, a game that tests pattern recognition or decision-making doesn’t require verbal explanations or cultural references, making it fairer for diverse populations.

2. Simulation-Based Assessments: Real-World Skills in Action

Simulations are another tool researchers highlight for creating fairer assessments. Simulations ask candidates to perform tasks that closely resemble what they’ll face on the job, such as handling customer issues or making quick decisions under pressure. These tests assess practical, job-relevant skills, and tend to show smaller performance differences between ethnic groups compared to traditional cognitive tests.

Assessment centers, where candidates role-play scenarios or solve work-related problems, are a well-known example of this. The latest advancement in this area is the gamified simulation, in which candidates might manage a virtual team or navigate a simulated crisis. These assessments allow for a more realistic evaluation of a person’s problem-solving, risk-taking, and judgment, qualities that GBAs are also designed to measure.

Simulations are powerful because they focus on real-world complexity. Judging candidates on how they perform in realistic situations, rather than on abstract questions, reduces the cultural biases that often creep into more traditional tests, which helps address the diversity-validity dilemma. De Soete et al. (2013) report that simulations with high stimulus fidelity, where the assessment closely mirrors the real job, are particularly effective in narrowing performance gaps.

3. Statistical Approaches for Fairer Scoring: Making Numbers Work for Inclusion

A major focus of the original paper by De Soete et al. (2013) on addressing the diversity-validity dilemma is how different statistical approaches can help reduce bias and improve fairness in assessments. There are several ways to use statistics to ensure that the selection process is both valid (predicting who will succeed on the job) and fair (not discriminating against any group).

One approach is to combine different types of assessments (cognitive, behavioral, and personality-based) into a composite score. This way, no single type of test dominates the result, and candidates are assessed holistically. For instance, cognitive tests might be combined with work simulations and personality assessments to give a fuller picture of a candidate’s abilities. The Pareto-optimal approach, mentioned in the paper, is a method for finding predictor weightings that offer the best available trade-offs between maximizing job performance prediction and minimizing adverse impact (selection outcomes that disadvantage minority groups).

Predictor weighting is another important tool. This means giving different tests different weights based on how well they predict success on the job. For example, if a cognitive test shows some bias against a minority group but is still important, it can be “balanced” by giving more weight to a non-cognitive test (like a simulation or personality test) that shows less bias. This combination helps ensure that the final decision isn’t skewed by a single test result.
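The weighting trade-off described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure from De Soete et al. (2013): the validities, group gaps, and predictor correlation are made-up numbers, the function names are hypothetical, and it assumes two standardized predictors whose correlation is the same in both groups. It uses the standard formula for a weighted sum of correlated variables, then keeps only the weightings that no other weighting beats on both validity and group gap.

```python
import math

# Hypothetical inputs for two standardized predictors (illustrative values only,
# not taken from De Soete et al., 2013).
VALIDITY = [0.50, 0.35]   # correlation of each predictor with job performance
GROUP_D = [1.00, 0.30]    # standardized majority-minority gap on each predictor
PREDICTOR_R = 0.20        # assumed correlation between the two predictors


def composite_stats(w_cognitive):
    """Return (validity, group gap) for a weighted sum of the two predictors."""
    w = [w_cognitive, 1.0 - w_cognitive]
    # Standard deviation of a weighted sum of two unit-variance predictors.
    sd = math.sqrt(w[0] ** 2 + w[1] ** 2 + 2 * w[0] * w[1] * PREDICTOR_R)
    validity = (w[0] * VALIDITY[0] + w[1] * VALIDITY[1]) / sd
    gap = (w[0] * GROUP_D[0] + w[1] * GROUP_D[1]) / sd
    return validity, gap


def pareto_front(steps=20):
    """Keep weightings that no other weighting beats on both criteria."""
    candidates = []
    for i in range(steps + 1):
        w = i / steps
        validity, gap = composite_stats(w)
        candidates.append((w, validity, gap))
    front = []
    for w, v, g in candidates:
        # A weighting is dominated if another one is at least as good on both
        # criteria and strictly better on at least one.
        dominated = any(
            v2 >= v and g2 <= g and (v2 > v or g2 < g)
            for _, v2, g2 in candidates
        )
        if not dominated:
            front.append((w, v, g))
    return front


if __name__ == "__main__":
    for w, v, g in pareto_front():
        print(f"cognitive weight {w:.2f}: validity {v:.3f}, group gap {g:.3f}")
```

With these made-up numbers, relying on the cognitive test alone is not on the front: an equal-weight composite predicts performance at least as well while showing a smaller group gap. Real applications would estimate the inputs from validation data and usually involve more than two predictors.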

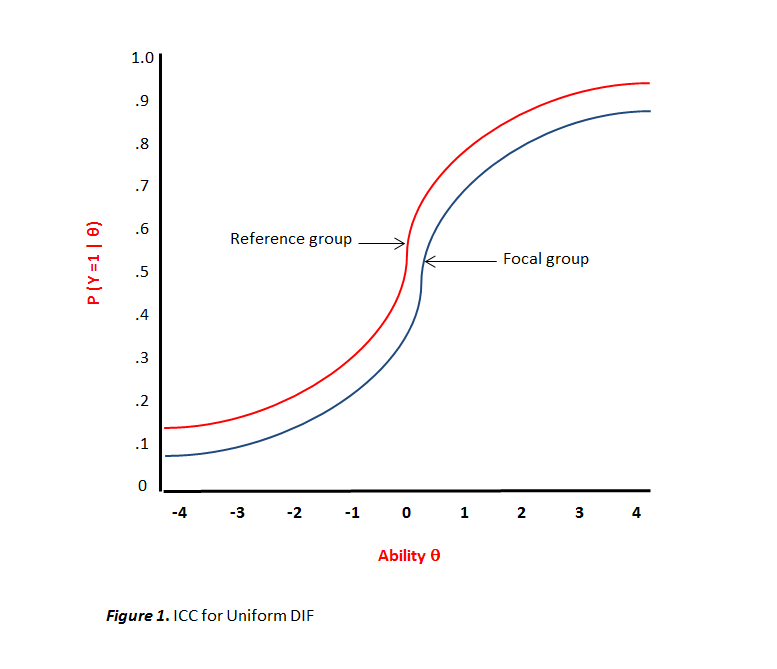

Statistical tools such as item response theory (IRT) and differential item functioning (DIF) analysis are also used to detect bias at the level of individual test items. These tools help ensure that no single test item unfairly disadvantages one group over another. For instance, if an item on a cognitive test systematically disadvantages non-native English speakers of equal ability, a DIF analysis can flag it so that test developers can revise or remove it, improving the fairness of the overall test.
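To make item-level bias detection concrete, here is a minimal sketch of one common DIF screen, the Mantel-Haenszel procedure. It is a non-IRT method chosen here for brevity, not necessarily the one the paper has in mind. Candidates are first matched on total test score, and the item is then compared across groups within each score level. The function name and the counts in the example are hypothetical.

```python
import math


def mantel_haenszel_dif(strata):
    """Mantel-Haenszel DIF statistics for a single test item.

    strata: one tuple per matched total-score level, holding
    (reference_correct, reference_wrong, focal_correct, focal_wrong).
    Returns (common odds ratio, delta on the ETS scale).
    """
    numerator = 0.0
    denominator = 0.0
    for ref_right, ref_wrong, focal_right, focal_wrong in strata:
        n = ref_right + ref_wrong + focal_right + focal_wrong
        if n == 0:
            continue  # skip empty score levels
        numerator += ref_right * focal_wrong / n
        denominator += ref_wrong * focal_right / n
    if numerator == 0 or denominator == 0:
        raise ValueError("not enough data to estimate the odds ratio")
    odds_ratio = numerator / denominator
    # ETS delta metric: negative values mean the item is harder for the
    # focal group than for equally able reference-group members.
    delta = -2.35 * math.log(odds_ratio)
    return odds_ratio, delta


if __name__ == "__main__":
    # Hypothetical counts for one item at three matched score levels.
    item_counts = [(20, 20, 10, 30), (30, 10, 20, 20), (36, 4, 30, 10)]
    odds_ratio, delta = mantel_haenszel_dif(item_counts)
    print(f"odds ratio {odds_ratio:.2f}, delta {delta:.2f}")
```

An odds ratio near 1 (delta near 0) suggests the item behaves similarly for matched candidates in both groups. Values far from that are a signal to review the item, ideally alongside a significance test and expert judgment rather than as an automatic removal rule.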

4. Reducing Criterion-Irrelevant Variance

Researchers also emphasize reducing criterion-irrelevant variance, meaning the parts of a test that don’t actually predict job performance but still influence scores. Often, these irrelevant factors are the root of cultural biases in assessments. For example, a test that requires advanced language skills may disadvantage candidates who aren’t native speakers, even if those language skills aren’t relevant to the job.
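A toy model can show why this matters. The sketch below is an illustration under stated assumptions, not an analysis from the paper: a test score is treated as job-relevant ability plus a language component plus noise, the two groups are assumed to have equal ability, and they differ only in proficiency in the test language. The function name and all numbers are hypothetical.

```python
import math


def observed_gap(language_loading, language_gap, noise_sd=0.5):
    """Standardized group gap on a test score under a toy model.

    Model: score = ability + language_loading * language_skill + noise.
    Assumes ability and language_skill are independent with unit variance
    within each group, and that the groups do not differ in ability.
    language_gap is the group difference in language skill, in SD units.
    """
    within_group_sd = math.sqrt(1.0 + language_loading ** 2 + noise_sd ** 2)
    return language_loading * language_gap / within_group_sd


if __name__ == "__main__":
    for loading in (0.0, 0.3, 0.6):
        gap = observed_gap(loading, language_gap=1.0)
        print(f"language loading {loading:.1f}: score gap {gap:.2f} SD")
```

In this model the score gap is zero when the test does not depend on language and grows as the language loading increases, even though the groups are equal on the ability the job requires. That gap is pure criterion-irrelevant variance.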

This is where non-verbal assessments shine. Tests like Raven’s Progressive Matrices or Cattell’s Culture Fair Intelligence Test measure reasoning ability through visual and spatial tasks, eliminating the need for language or culturally specific knowledge. These assessments are particularly helpful for hiring in global or multilingual contexts because they focus purely on cognitive ability, reducing the impact of irrelevant cultural factors.

Game-based assessments can also reduce this irrelevant variance by using interactive, non-verbal scenarios. For instance, a game that challenges candidates to solve puzzles or navigate virtual environments can effectively measure problem-solving without relying on language or culturally loaded references. This makes it more inclusive for candidates from various backgrounds and cultures, which helps address the diversity-validity dilemma.

5. Enhancing Candidate Experience

When candidates feel that an assessment is fair and relevant to the job, they tend to perform better. This is particularly true for ethnic minority candidates, who may be more sensitive to tests that feel biased or disconnected from the job they are applying for. Ellison et al. (2020) note that test-taker perceptions (how fair and valid candidates feel the test is) play a crucial role in their performance.

Game-based assessments have a clear advantage here. Games are engaging, interactive, and feel less like a traditional exam. Candidates are often more motivated when they feel they are being tested in a context that mirrors real-life challenges. This improves their experience and reduces stereotype threat—the fear of confirming negative stereotypes about their group. Reducing this threat is critical, as it can lead to better performance, particularly among minority candidates who might otherwise feel disadvantaged.

How PerspectAI’s Assessments Challenge the Diversity-Validity Dilemma

At PerspectAI, we understand the importance of building assessments grounded in well-established psychological research. Our game-based assessments (GBAs) take inspiration from classic non-verbal cognitive tests that have proven to be both valid and fair across diverse populations. By adapting these traditional tests into engaging, interactive formats, we bridge the gap between scientific theory and real-world application. Our approach thus addresses the diversity-validity dilemma, making cognitive testing more inclusive, accessible, and engaging.

PerspectAI’s Non-Verbal Assessments

Some of our gamified assessments inspired by traditional non-verbal cognitive assessments include:

- Working Memory: Based on the Corsi Block Test

The Corsi Block Test is a widely used non-verbal test that measures working memory by asking participants to recall the sequence of blocks that light up. We’ve adapted this into an interactive game format where users engage in increasingly complex visual memory tasks. This maintains the test’s core scientific foundation while making it more dynamic and user-friendly.

- Abstract Reasoning: Using Raven’s Progressive Matrices

To measure abstract reasoning, we incorporate elements from the Raven’s Progressive Matrices, a well-regarded non-verbal test that evaluates a person’s ability to recognize patterns and solve visual puzzles. By transforming this into a game where candidates identify missing pieces in visual grids, we measure abstract thinking without the cultural biases found in verbal tests.

- Problem-Solving: Using the Tower of Hanoi Task

Our problem-solving game is inspired by the Tower of Hanoi, a puzzle that challenges players to move disks between pegs following specific rules. This classic cognitive task assesses strategic thinking, planning, and problem-solving abilities. Our version replicates this structure in an engaging virtual environment, making the task feel more like a puzzle-solving adventure than a test.

- Cognitive Flexibility: Based on the Color-Shape Switch Task

To measure cognitive flexibility—the ability to switch between thinking about different concepts—we use a task based on the Color-Shape Switch Task. This classic test asks participants to switch between sorting shapes by either color or form, testing their ability to adapt to new rules. Our game version challenges players to alternate between different sorting tasks in real time, providing a fun, yet scientifically grounded, way to measure cognitive flexibility.
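For readers curious how a task like the Tower of Hanoi can yield a number, here is a small sketch. It is not PerspectAI’s scoring method: the `excess_moves` metric and all names are hypothetical, included only to show that the puzzle has a known optimal solution (2^n − 1 moves for n disks) against which a candidate’s attempt could be compared.

```python
def solve_hanoi(n_disks, source="A", target="C", spare="B"):
    """Return the optimal solution as a list of (from_peg, to_peg) moves."""
    if n_disks == 0:
        return []
    return (
        # Move the n-1 smaller disks out of the way onto the spare peg,
        solve_hanoi(n_disks - 1, source, spare, target)
        # move the largest disk to the target,
        + [(source, target)]
        # then move the smaller disks from the spare peg onto it.
        + solve_hanoi(n_disks - 1, spare, target, source)
    )


def excess_moves(candidate_moves, n_disks):
    """Hypothetical efficiency metric: moves used beyond the optimal count."""
    optimal = 2 ** n_disks - 1
    return candidate_moves - optimal


if __name__ == "__main__":
    print(len(solve_hanoi(3)))   # optimal number of moves for 3 disks
    print(excess_moves(10, 3))   # a candidate who needed 10 moves
```

A real assessment would likely look at more than move counts, such as planning time or rule violations, but the optimal-solution baseline is what makes the task scoreable without any language component.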

Why These Tests Matter

- Fairness and Accessibility: These non-verbal tests have a long history of being used across cultures because they minimize the need for language and cultural knowledge, making them fairer for diverse populations.

- Scientific Rigor: Each of the traditional tests we base our games on is backed by decades of psychological research, which gives our games a foundation that is not just engaging but also psychometrically sound: valid, reliable, and consistent across different populations.

- Real-World Application: By turning these classic tests into games, we enhance candidate engagement and make the assessment process more interactive, without losing the scientific accuracy that makes them effective in predicting job performance.

At PerspectAI, we’ve taken the best of cognitive psychology and created accessible, game-based tools that meet the needs of modern workplaces while maintaining the fairness and scientific rigor of traditional testing methods.

Addressing the diversity-validity dilemma requires a mix of approaches, but the goals are clear: assessments need to be fair, culturally inclusive, and scientifically valid. Game-based assessments, with their focus on non-verbal problem-solving, real-world simulations, and positive candidate experiences, offer a promising path forward. By combining these innovative tools with smart statistical approaches and fair testing principles, companies can create hiring processes that not only select the best candidates but do so in a way that promotes fairness and inclusion for all.

Further Reading:

- De Soete, B., Lievens, F., & Druart, C. (2013). Strategies for dealing with the diversity-validity dilemma in personnel selection: Where are we and where should we go? Revista de Psicología del Trabajo y de las Organizaciones, 29(1), 3-12.

- Ellison, L. J., McClure Johnson, T., Tomczak, D., Siemsen, A., & Gonzalez, M. F. (2020). Game on! Exploring reactions to game-based selection assessments. Journal of Managerial Psychology, 35(4), 241-254.

- O’Leary, R. S., Forsman, J. W., & Isaacson, J. A. (2017). The role of simulation exercises in selection. The Wiley Blackwell handbook of the psychology of recruitment, selection and employee retention, 247-270.

- Rottman, C., Gardner, C., Liff, J., Mondragon, N., & Zuloaga, L. (2023). New strategies for addressing the diversity–validity dilemma with big data. Journal of Applied Psychology, 108(9), 1425.

- Thornton III, G. C., & Gibbons, A. M. (2009). Validity of assessment centers for personnel selection. Human Resource Management Review, 19(3), 169-187.